In this third and final part of the series, I take the basic skeleton from the previous two blogs and use it to bring all of the vSAN Skyline Health checks into one central location with a vSAN Health Alarm Check Script.

My two previous blogs can be found here:

NSX Backup Check Script (Using the NSX Web API)

NSX Alarm Check Script (Using the NSX REST API)

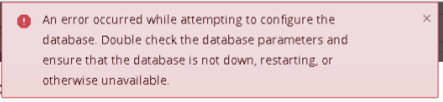

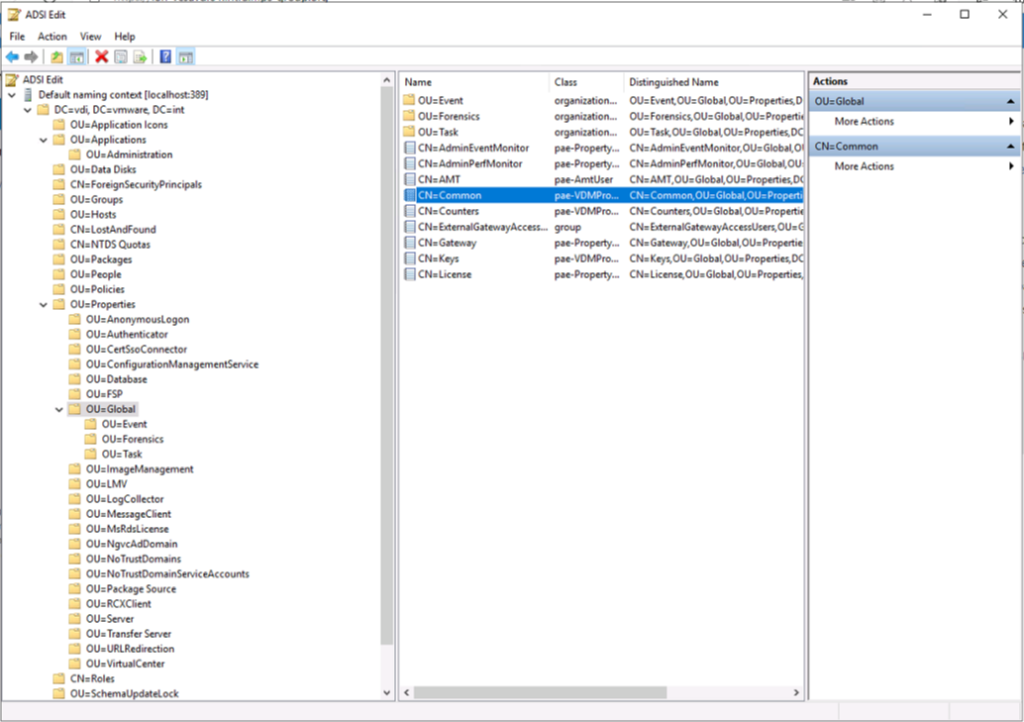

Unfortunately, this time, despite my best efforts, I was unable to get a suitable result using the vCenter REST API. Documentation is lacking and I could not get full results for the Skyline Health checks. From asking around, it seems that PowerCLI holds the answer, which gave me an excuse to adapt the script again and get it working with PowerCLI.

Again, you might be asking ‘why not just use the vSAN Management Pack for vROps?’, but alas it does not keep pace with vSAN Skyline Health and it is missing some alarms.

PowerCLI

For those not aware of everything PowerCLI can do, the full reference for the vSphere and vSAN cmdlets is here:

https://developer.vmware.com/docs/powercli/latest/products/vmwarevsphereandvsan/

We are going to use the Get-VSANView cmdlet to pull the information out of vCenter.

The health information comes from the “VsanVcClusterHealthSystem-vsan-cluster-health-system” Managed Object. Details of this can be found here:

The Code Changes

The try/catch block has been changed to connect to the vCenter first and then call a function to get the vSAN health summary for each cluster.

try{

Connect-VIServer -Server $vCenter -Credential $encodedlogin

$Clusters = Get-Cluster

foreach ($Cluster in $Clusters) {

Get-VsanHealthSummary -Cluster $Cluster

}

}

catch {catchFailure}

So let's have a look at the function itself.

The Get vSAN Cluster Health function

I have written a function that takes a cluster name as a parameter, finds the Managed Object Reference (MoRef) for the cluster, queries the vCenter for the vSAN cluster health for that MoRef, and outputs any checks that are Yellow (Warning) or Red (Critical).

Function Get-VsanHealthSummary {

param(

[Parameter(Mandatory=$true)][String]$Cluster

)

$vchs = Get-VSANView -Id "VsanVcClusterHealthSystem-vsan-cluster-health-system"

$cluster_view = (Get-Cluster -Name $Cluster).ExtensionData.MoRef

$results = $vchs.VsanQueryVcClusterHealthSummary($cluster_view,$null,$null,$true,$null,$null,'defaultView')

$healthCheckGroups = $results.groups

$timestamp = (Get-Date).ToString("yyyy/MM/dd HH:mm:ss")

foreach($healthCheckGroup in $healthCheckGroups) {

$Health = @("Yellow","Red")

$output = $healthCheckGroup.grouptests | where TestHealth -in $Health | select TestHealth,@{l="TestId";e={$_.testid.split(".") | select -last 1}},TestName,TestShortDescription,@{l="Group";e={$healthCheckGroup.GroupName}}

# A group can contain several failing tests, so log each one on its own line

foreach ($test in $output) {

$healthCheckTestHealthAlt = if ($test.TestHealth -eq "yellow") { "Warning" } else { "Critical" }

Add-Content -Path $exportpath -Value "$timestamp [$healthCheckTestHealthAlt] $vCenter - vSAN Clustername $Cluster vSAN Alarm Name $($test.TestName) Alarm Description $($test.TestShortDescription)"

Start-Sleep -Seconds 1

}

}

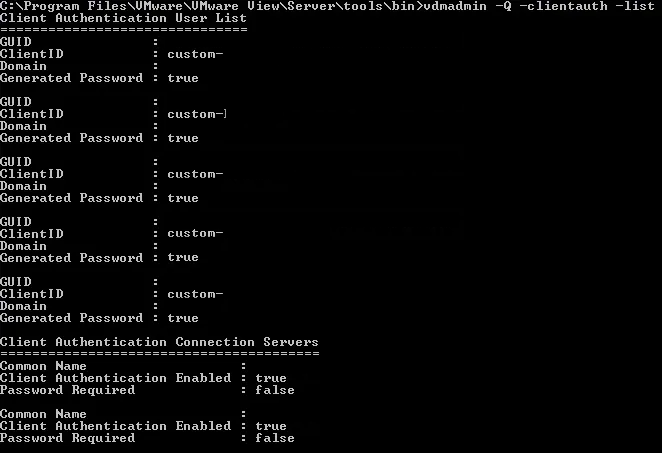

}Saving Credentials

This time, as we are using PowerCLI and Connect-VIServer, we cannot use the encoded credentials we used last time for the Web and REST APIs. Instead we will use the Export-Clixml cmdlet, which creates an XML-based representation of an object and stores it in a file.

Further details of this utility can be found here:

We will use Get-Credential to prompt for the username and password, and then export the resulting credential to the path defined in the variables at the top of the script. The prompt only appears when the credential file does not already exist.

if (-Not(Test-Path -Path $credPath)) {

$credential = Get-Credential

$credential | Export-Clixml -Path $credPath

}

$encodedlogin = Import-Clixml -Path $credPath
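To see what the credential file round trip looks like without an interactive prompt, here is a minimal sketch. The user name, password, and temporary file name are made-up placeholders for illustration and are not values from the script.

```powershell
# Build a throwaway credential (placeholder values, for illustration only)
$securePassword = ConvertTo-SecureString -String 'NotARealPassword' -AsPlainText -Force
$credential = New-Object System.Management.Automation.PSCredential('svc-example@vsphere.local', $securePassword)

# Export to a temporary file, then read it back the same way the script does
$tempCredPath = Join-Path ([System.IO.Path]::GetTempPath()) 'example.cred'
$credential | Export-Clixml -Path $tempCredPath
$restored = Import-Clixml -Path $tempCredPath

# The restored object is a PSCredential again, ready to pass to -Credential
$restored.GetType().Name
$restored.UserName

# Clean up the temporary file
Remove-Item -Path $tempCredPath
```

On Windows, Export-Clixml protects the password with the Windows Data Protection API, so the file can only be decrypted by the same user account on the same machine. A scheduled task running as a different account would need its own credential file.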

Handling the Outputs

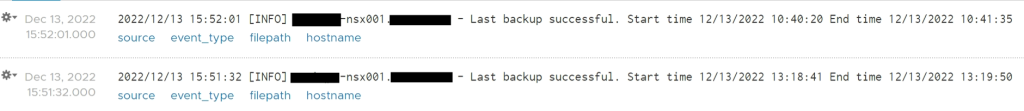

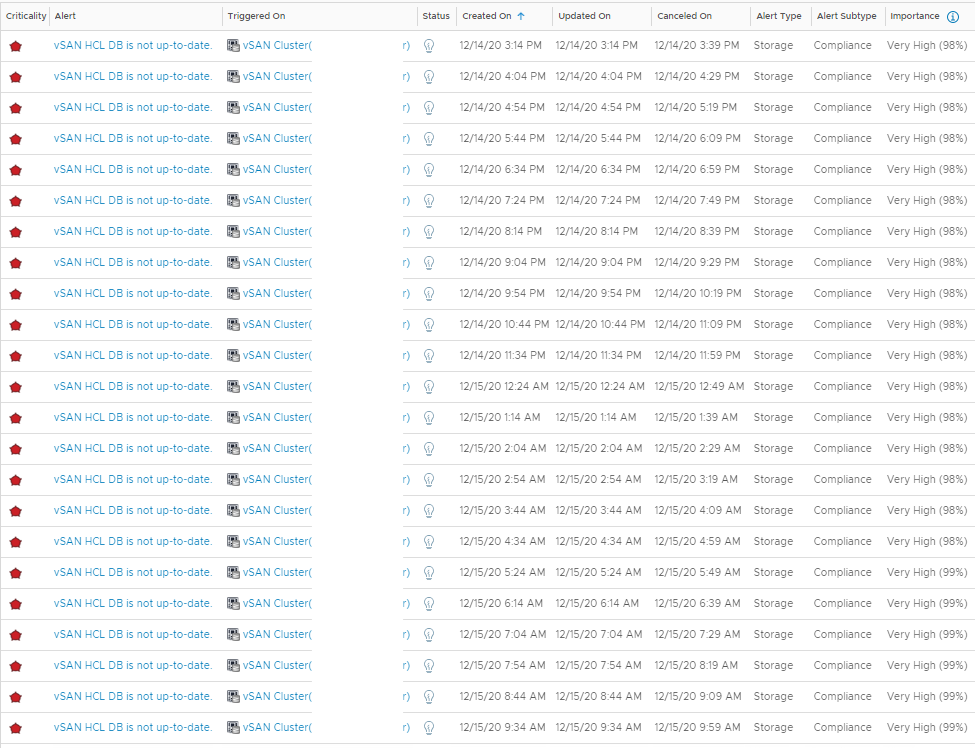

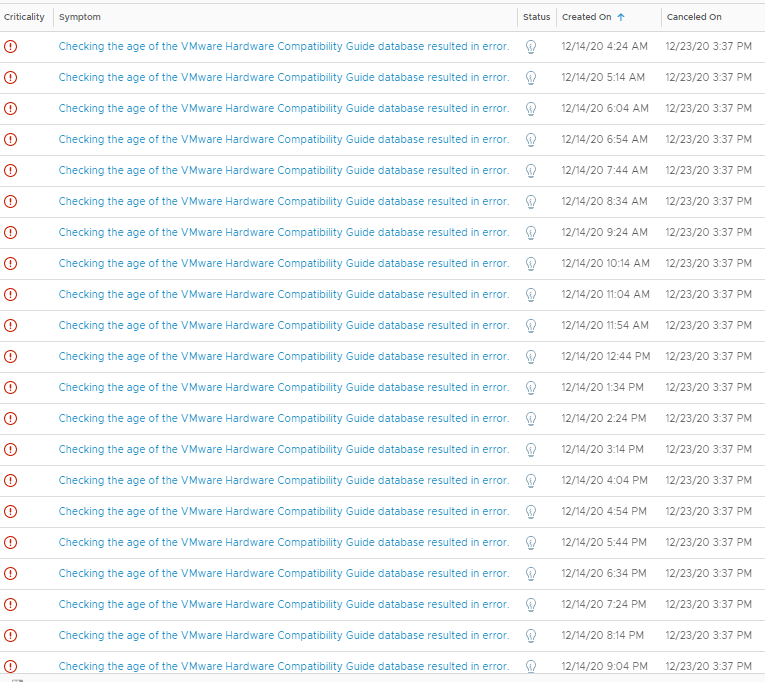

As per my previous scripts, the outputs are formatted to be ingested into a syslog server (vRealize Log Insight in this case), which then sends emails to the appropriate places and allows for a nice dashboard for quick whole-estate checks.
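As a rough illustration of the line layout the script writes, the sketch below builds a single log line from sample values. The function name Format-VsanAlarmLine and all of the example values are hypothetical; the script itself builds the string inline in Add-Content.

```powershell
# Hypothetical helper: builds one log line in the same layout the script writes
function Format-VsanAlarmLine {
    param(
        [Parameter(Mandatory=$true)][String]$Severity,
        [Parameter(Mandatory=$true)][String]$VCenter,
        [Parameter(Mandatory=$true)][String]$Cluster,
        [Parameter(Mandatory=$true)][String]$AlarmName,
        [Parameter(Mandatory=$true)][String]$Description
    )
    # Same timestamp format as the script
    $timestamp = (Get-Date).ToString("yyyy/MM/dd HH:mm:ss")
    "$timestamp [$Severity] $VCenter - vSAN Clustername $Cluster vSAN Alarm Name $AlarmName Alarm Description $Description"
}

# Example values are placeholders, not real alarm data
$line = Format-VsanAlarmLine -Severity "Warning" -VCenter "vcenter.example.local" -Cluster "Cluster01" -AlarmName "Example test" -Description "Example description"
$line
```

Keeping the severity in square brackets straight after the timestamp gives the log server one consistent token to filter or alert on, whichever script wrote the line.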

The Final vSAN Health Alarm Check Script

I have put all the variables at the top. The script is designed to be run from its own folder, with a separate folder holding the logs. This was done in order to manage multiple scripts logging to the same location.

e.g.:

c:\scripts\NSXBackupCheck\NSXBackupCheck.ps1

c:\scripts\Logs\NSXBackupCheck.log

param ($vCenter)

$curDir = &{$MyInvocation.PSScriptRoot}

$exportpath = "$curDir\..\Logs\vSANAlarmCheck.log"

$credPath = "$curDir\$vCenter.cred"

$scriptName = &{$MyInvocation.ScriptName}

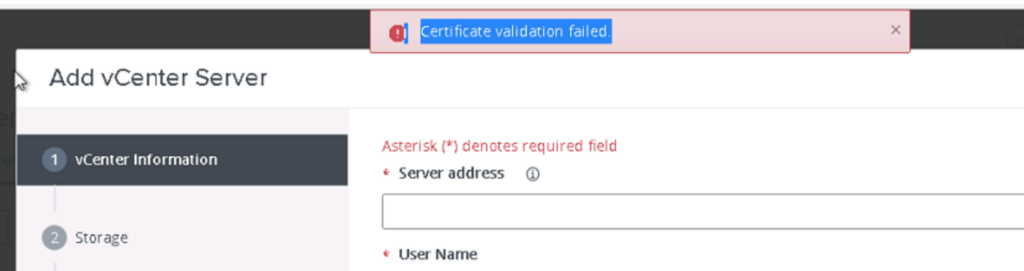

add-type @"

using System.Net;

using System.Security.Cryptography.X509Certificates;

public class TrustAllCertsPolicy : ICertificatePolicy {

public bool CheckValidationResult(

ServicePoint srvPoint, X509Certificate certificate,

WebRequest request, int certificateProblem) {

return true;

}

}

"@

[System.Net.ServicePointManager]::CertificatePolicy = New-Object TrustAllCertsPolicy

Function Get-VsanHealthSummary {

param(

[Parameter(Mandatory=$true)][String]$Cluster

)

$vchs = Get-VSANView -Id "VsanVcClusterHealthSystem-vsan-cluster-health-system"

$cluster_view = (Get-Cluster -Name $Cluster).ExtensionData.MoRef

$results = $vchs.VsanQueryVcClusterHealthSummary($cluster_view,$null,$null,$true,$null,$null,'defaultView')

$healthCheckGroups = $results.groups

$timestamp = (Get-Date).ToString("yyyy/MM/dd HH:mm:ss")

foreach($healthCheckGroup in $healthCheckGroups) {

$Health = @("Yellow","Red")

$output = $healthCheckGroup.grouptests | where TestHealth -in $Health | select TestHealth,@{l="TestId";e={$_.testid.split(".") | select -last 1}},TestName,TestShortDescription,@{l="Group";e={$healthCheckGroup.GroupName}}

# A group can contain several failing tests, so log each one on its own line

foreach ($test in $output) {

$healthCheckTestHealthAlt = if ($test.TestHealth -eq "yellow") { "Warning" } else { "Critical" }

Add-Content -Path $exportpath -Value "$timestamp [$healthCheckTestHealthAlt] $vCenter - vSAN Clustername $Cluster vSAN Alarm Name $($test.TestName) Alarm Description $($test.TestShortDescription)"

Start-Sleep -Seconds 1

}

}

}

function catchFailure {

$timestamp = (Get-Date).ToString("yyyy/MM/dd HH:mm:ss")

if (Test-Connection -BufferSize 32 -Count 1 -ComputerName $vCenter -Quiet) {

Add-Content -Path $exportpath -Value "$timestamp [ERROR] $vCenter - $_"

}

else {

Add-Content -Path $exportpath -Value "$timestamp [ERROR] $vCenter - Host Not Found"

}

exit

}

if (!$vCenter) {

Write-Host "please provide parameter 'vCenter' in the format '$scriptName -vCenter [FQDN of vCenter Server]'"

exit

}

if (-Not(Test-Path -Path $credPath)) {

$credential = Get-Credential

$credential | Export-Clixml -Path $credPath

}

$encodedlogin = Import-Clixml -Path $credPath

try{

Connect-VIServer -Server $vCenter -Credential $encodedlogin

$Clusters = Get-Cluster

foreach ($Cluster in $Clusters) {

Get-VsanHealthSummary -Cluster $Cluster

}

}

catch {catchFailure}

Disconnect-VIServer $vCenter -Confirm:$false

Overview

The final script above can be used as a skeleton for any other PowerShell or PowerCLI commands, and it can also be adapted for REST APIs and Web APIs as per the previous blogs. Note that those versions use a different credential store function.
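One way to picture the reusable part of the skeleton is a small wrapper that runs any check and logs a failure in the same [ERROR] format. This is only a sketch under my own assumptions: the function name Invoke-CheckWithLogging, its parameters, and the example target are hypothetical and do not appear in the script above.

```powershell
# Hypothetical wrapper: runs any check and logs a failure in the script's [ERROR] format
function Invoke-CheckWithLogging {
    param(
        [Parameter(Mandatory=$true)][String]$Target,
        [Parameter(Mandatory=$true)][String]$LogPath,
        [Parameter(Mandatory=$true)][ScriptBlock]$Check
    )
    try {
        # Run whatever PowerShell or PowerCLI commands the caller supplied
        & $Check
    }
    catch {
        # Same timestamp and line layout as the catchFailure function
        $timestamp = (Get-Date).ToString("yyyy/MM/dd HH:mm:ss")
        Add-Content -Path $LogPath -Value "$timestamp [ERROR] $Target - $_"
    }
}

# Example: a check that always fails, logged to a temporary file
$logPath = Join-Path ([System.IO.Path]::GetTempPath()) 'ExampleCheck.log'
Invoke-CheckWithLogging -Target "server.example.local" -LogPath $logPath -Check { throw "Example failure" }
Get-Content -Path $logPath
```

Passing the check in as a script block keeps the logging format in one place, so swapping the vSAN query for another command does not touch the error handling.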

The two previous blogs can be found here:

NSX Backup Check Script (Using the NSX Web API)

NSX Alarm Check Script (Using the NSX REST API)